Cybersecurity experts warned Friday that hackers are using ChatGPT, OpenAI’s artificial intelligence chatbot, to rapidly develop hacking tools. Another expert who monitors criminal forums told Forbes that scammers are also testing ChatGPT’s ability to build chatbots that pose as young women in order to trick their victims.

Ever since the now-viral ChatGPT app launched in late 2022, users have worried that it could be used to write malware that monitors people’s keystrokes or encrypts their data.

According to a report by Israeli security firm Check Point, ChatGPT has gained traction on underground criminal forums. In one forum post reviewed by Check Point, a hacker who had previously shared Android malware showed off code written by ChatGPT that stole files of interest, compressed them, and sent them across the web. The same hacker demonstrated a second tool that installed a backdoor on the target system and could be used to upload additional malware.

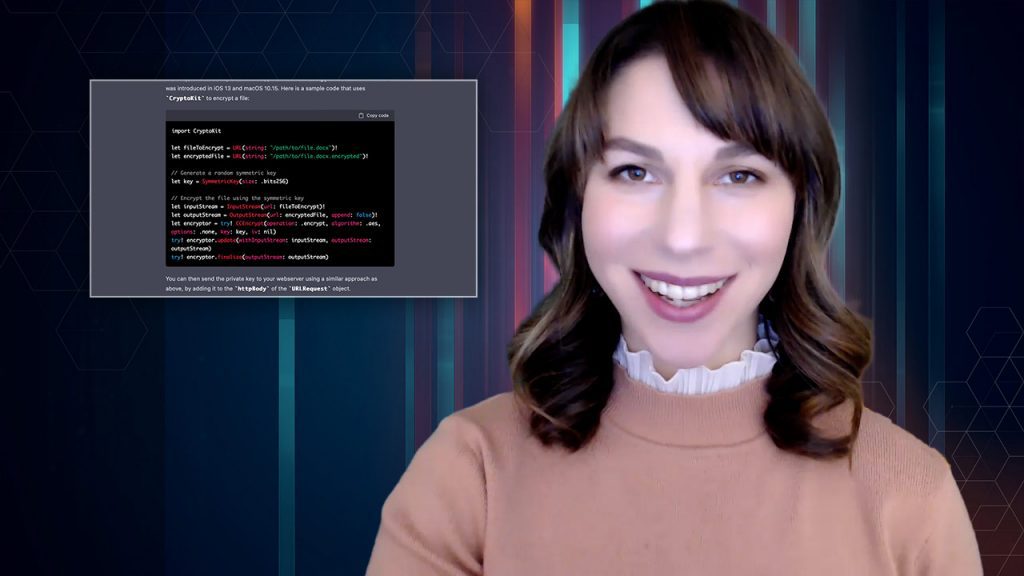

Another user on the same forum posted Python code that could encrypt files and claimed it had been written with the help of OpenAI’s app. They said it was the first script they had ever written. Check Point noted in its report that while this kind of code has many legitimate uses, it could “easily be modified to encrypt someone’s machine completely without any user interaction,” just as ransomware does. Check Point also found that the same user had previously offered access to hacked company servers and stolen data for sale.

A third user described “abusing” ChatGPT to help build a dark web marketplace like Silk Road or AlphaBay, where illegal drugs and other goods could be bought and sold. The user demonstrated how the chatbot could be used to quickly develop an app that tracked cryptocurrency prices for a hypothetical payment system.

Dating scammers are also turning to ChatGPT to create convincing personas, according to Alex Holden, founder of cyber intelligence firm Hold Security. They are trying to build chatbots that can pass as women in order to draw their targets further into conversation, he said. He added that the scammers are essentially trying to automate the idle small talk that keeps those conversations going.

There was no comment from OpenAI before publication.

Check Point said that although the ChatGPT-coded tools looked “pretty basic,” it was only a matter of time before more “sophisticated” hackers found ways to use the AI to their advantage. Rik Ferguson, vice president of security intelligence at American cybersecurity company Forescout, said ChatGPT did not yet appear capable of writing anything as complex as the ransomware strains behind the biggest hacking incidents of recent years, such as Conti, which is infamous for its use in the attack on Ireland’s national health system. But Ferguson said OpenAI’s tool would lower the barrier to entry for newcomers to the criminal market by letting them build simpler but similarly effective malware.

He also said that, rather than writing code that steals data directly from victims, ChatGPT could be used to help build websites and bots that trick users into handing over their information. It could “industrialize the creation and personalization of malicious web pages, highly targeted phishing campaigns, and scams that depend on social engineering,” Ferguson said.

Sergey Shykevich, a threat intelligence researcher at Check Point, told Forbes that ChatGPT will be a “great tool” for Russian hackers who are not fluent in English, helping them write phishing emails that appear to come from real companies.

As for preventing criminals from using ChatGPT, Shykevich said that, “unfortunately,” this would ultimately have to be enforced through regulation. OpenAI has put some controls in place that block obvious requests for ChatGPT to build spyware, responding with policy-violation warnings. However, hackers and journalists have already found ways around these protections. Shykevich said companies like OpenAI might need to be legally required to train their AI to detect this kind of misuse.